Submissions for IRCEP’s Experimentation and Validation Challenges are open until the end of February 2026. The Guidelines and Rules that participants must follow can be accessed here.

CODECO Innovation and Research Community Engagement Challenges Webinar

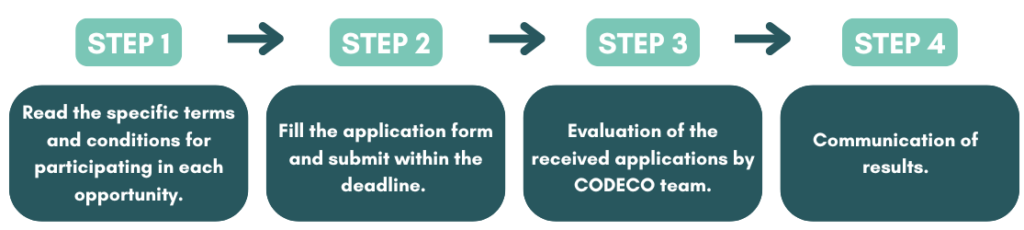

*Instructions for each of the challenges can be found below, as they open for submission.

Open Challenges

CODECO Data Generator #1

UPRC invites participants to take part in the CODECO Experimentation and Validation Challenge, focused on enhancing synthetic data generation for CRD (Common Resource Description) models. Participants will work with version 1 of the Synthetic Data Generator (SDG v1) in combination with the main version of the Data Generator. The objective is to develop an improved version that integrates the synthetic data generation capabilities of SDG v1 with the real CODECO CRDs provided by the main Data Generator. The goal is to produce synthetic data that accurately mirrors the structure and content of real CODECO CRDs. Participants are expected to submit a comprehensive set of results, including validation metrics and supporting evidence, demonstrating the fidelity and effectiveness of the enhanced synthetic data generation process.
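As one illustration of what a structural validation metric could look like, the sketch below compares the key paths found in a real and a synthetic CRD-like document. It is an assumption for illustration only, not the metric prescribed by the challenge: the documents are represented as plain nested Python dictionaries, and the names `key_paths` and `structural_similarity` are hypothetical.

```python
def key_paths(obj, prefix=""):
    """Collect the dotted key paths of a nested dict/list structure."""
    paths = set()
    if isinstance(obj, dict):
        for key, value in obj.items():
            path = f"{prefix}.{key}" if prefix else str(key)
            paths.add(path)
            paths |= key_paths(value, path)
    elif isinstance(obj, list):
        # List elements share one path segment, marked with "[]".
        for item in obj:
            paths |= key_paths(item, prefix + "[]")
    return paths


def structural_similarity(real, synthetic):
    """Jaccard similarity of key paths: 1.0 means identical structure."""
    real_paths = key_paths(real)
    synthetic_paths = key_paths(synthetic)
    if not real_paths and not synthetic_paths:
        return 1.0
    shared = real_paths & synthetic_paths
    return len(shared) / len(real_paths | synthetic_paths)
```

A score of this kind only captures structure. Fidelity of the values themselves (distributions, ranges, correlations) would need separate metrics.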

CODECO Data Generator #2

In this challenge, participants are invited by UPRC to explore, install, and rigorously evaluate the CODECO Data Generator alongside other comparable workload data generation tools. The objective is to perform a detailed, hands-on comparison that highlights the strengths, weaknesses, and limitations of each tool. Through this process, participants will contribute valuable insights into the performance and capabilities of CODECO within real-world or simulated environments.

CODECO Energy Awareness Strategies

This challenge, provided by FORTISS, focuses on evaluating the effectiveness of proposed energy-aware scheduling strategies within the CODECO framework. Participants are tasked with comparing their solutions against two key baselines: i) the standard Kubernetes (K8s) scheduler and ii) KEIDS or similar energy-aware scheduling approaches. The challenge will specifically assess the performance of these strategies across several SMART goals.

CODECO Intelligent Recommender #1: Load balancing with cross-layer RL optimization

This challenge focuses on evaluating the generalization capabilities of the CODECO intelligent recommender by applying its core logic to the critical use case of application workload balancing across a cluster of nodes. The foundation for this work is the established multi-objective Reinforcement Learning (RL) solution, developed by the i2CAT Foundation, which models the task allocation problem using cross-layer performance metrics collected via Prometheus. Participants will be tasked with three key objectives: (i) modeling the load balancing problem using the collected metrics, (ii) fine-tuning the existing RL model to improve the generated recommendations, and (iii) evaluating their optimized solution against the default Kubernetes scheduler.
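Comparing an optimized solution against the default Kubernetes scheduler requires some measure of how evenly load is spread. One possible measure, assumed here purely for illustration, is the coefficient of variation of per-node CPU utilization, where lower values indicate a more even spread. The function name `load_imbalance` is hypothetical, and the challenge may define its own metrics.

```python
from statistics import mean, pstdev


def load_imbalance(node_utilizations):
    """Coefficient of variation of per-node utilization (0.0 = perfectly balanced)."""
    if not node_utilizations:
        raise ValueError("at least one node utilization value is required")
    average = mean(node_utilizations)
    if average == 0:
        # An idle cluster is treated as balanced.
        return 0.0
    return pstdev(node_utilizations) / average
```

Computing this value for the same workload under each scheduler gives a single number per run that can be compared directly.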

CODECO Intelligent Recommender #2: Benchmarking across diverse vertical workloads

This challenge is designed to assess the applicability and adaptability of the CODECO intelligent recommender when exposed to varied simulated workloads corresponding to applications from multiple sectors. The challenge leverages the core Reinforcement Learning (RL) model that drives the recommendation engine, coupled with a highly configurable simulated workload generator. Participants are required to: (i) model the resource requirements (such as CPU and RAM usage) for applications mirroring at least two distinct domain verticals, and (ii) evaluate the quality and accuracy of the RL model’s generated recommendations in comparison to a predefined set of baseline experiments.

CODECO Resilience strategies evaluation

This challenge, proposed by FORTISS, aims to evaluate the resilience strategies integrated into the CODECO framework. Participants will assess the effectiveness of their proposed solutions by comparing them against two key baselines: i) CODECO without resilience and ii) the vanilla Kubernetes (K8s) scheduler. The challenge focuses on measuring resilience across several SMART goals.

CODECO Secure Connectivity

In this challenge, participants explore and evaluate the CODECO Secure Connectivity module, focusing on ensuring secure, reliable communication in Edge-Cloud environments. The goal is to assess its strengths and limitations by testing various configurations and strategies, ultimately contributing insights into the module’s performance in real or simulated scenarios.

[CODEF and Benchmarking] Dynamic Stress Testing

In this challenge, coordinated by ATH and UPRC, we aim to rigorously evaluate the resilience and adaptability of CODECO-managed deployments under adverse conditions. Participants will introduce artificial failures and resource bottlenecks using stress testing and chaos engineering tools, for example by killing pods, saturating CPUs, or throttling network bandwidth. The objective is to assess how effectively CODECO responds to these disruptions, reconfigures resources, and maintains optimal service levels under pressure.

[CODEF and Benchmarking] Security Mechanisms for CODEF

In this challenge, led by ATH and UPRC, participants will focus on proposing and implementing comprehensive end-to-end security mechanisms for the CODEF framework. The objective is to enhance the security posture of CODEF by designing and deploying robust solutions that protect data, services, and system communications across the entire lifecycle. Participants will evaluate and refine their security strategies to ensure that CODEF is resilient against potential vulnerabilities and attacks, while maintaining the integrity and availability of system operations. This challenge presents a unique opportunity to push the boundaries of security within dynamic, distributed environments.

[CODEF and Benchmarking] Testing, Debugging and AI-powered assistant

In this challenge, spearheaded by ATH and UPRC, participants will explore how the integration of AI/ML can enhance the CODEF framework’s ability to perform testing, live debugging, and self-healing in real time. The goal is to leverage intelligent automation to improve CODEF’s robustness, enabling faster detection and resolution of issues. By optimizing these capabilities, participants will work towards reducing the Mean Time to Repair (MTTR), ultimately increasing the overall reliability and efficiency of the system. This challenge invites participants to experiment with innovative AI/ML approaches that can transform how CODECO handles system failures and operational anomalies.
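MTTR is conventionally computed as the total repair time divided by the number of incidents. The sketch below shows that calculation under the assumption that each incident is recorded as a pair of detection and resolution timestamps. The function name and the sample timestamps are hypothetical and do not represent measured results.

```python
from datetime import datetime, timedelta


def mean_time_to_repair(incidents):
    """Average of (resolved - detected) over a list of (detected, resolved) pairs."""
    if not incidents:
        raise ValueError("at least one incident is required")
    total = sum((resolved - detected for detected, resolved in incidents), timedelta())
    return total / len(incidents)


# Hypothetical incidents: one repaired in 10 minutes, one in 20 minutes.
sample = [
    (datetime(2026, 1, 1, 10, 0), datetime(2026, 1, 1, 10, 10)),
    (datetime(2026, 1, 1, 12, 0), datetime(2026, 1, 1, 12, 20)),
]
print(mean_time_to_repair(sample))  # prints 0:15:00
```

Measuring MTTR with and without an AI/ML-assisted mechanism over the same injected failures gives a direct way to quantify any improvement.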

Evaluate the CODECO SWM Scheduler

This challenge, provided by FORTISS, is designed to assess the performance of CODECO’s graph-based scheduling approach, specifically Seamless Workload Migration (SWM), by comparing it against two key baselines: the vanilla Kubernetes (K8s) scheduler and the Kubernetes network-aware scheduler.